Sudden and huge growth of Windmill's DB

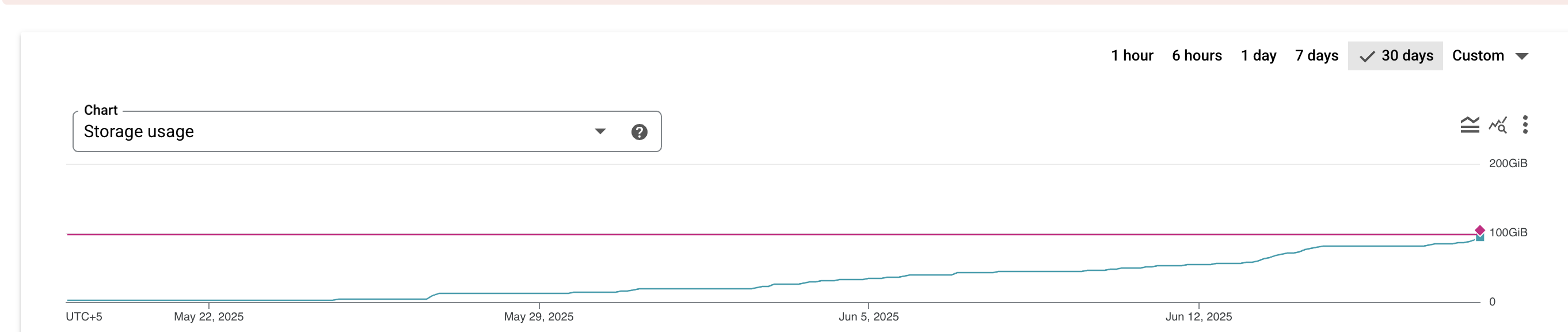

I noticed today that, starting about a month ago, the disk usage of our Windmill CloudSQL instance suddenly grew from 3-4 GB to 90-100 GB.

I know this is not entirely related to Windmill per se, but based on your experience, are there specific things that can trigger this kind of growth and that I should look at first?

Thanks!
