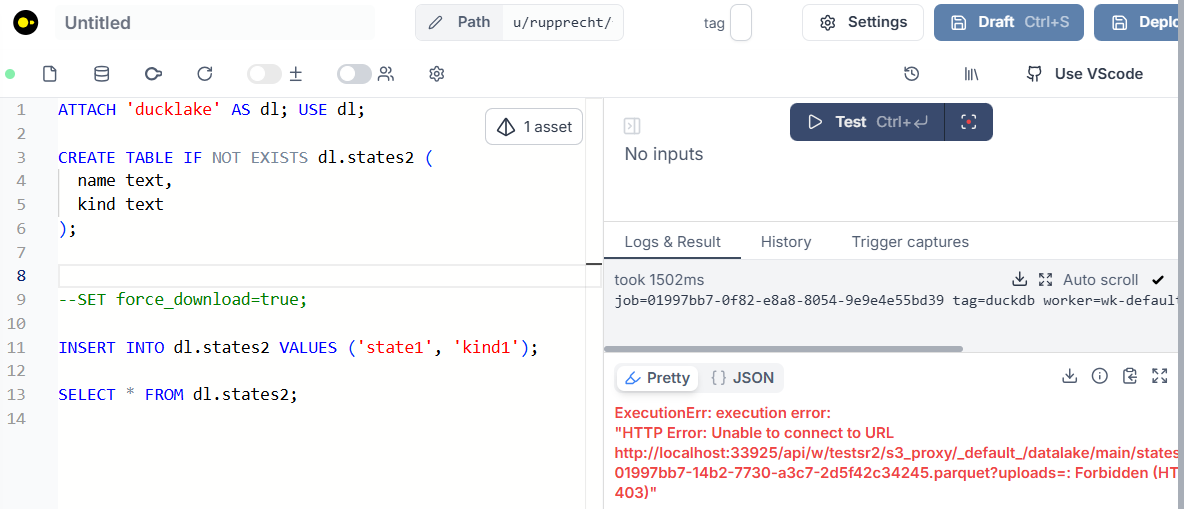

DuckLake error: writing a Parquet file fails.

ExecutionErr: execution error:

"HTTP Error: Unable to connect to URL http://localhost:36867/api/w/testsr2/s3_proxy/_default_/datalake/main/states2/ducklake-01997aa2-1513-75ee-a66e-ff5a6e3d9aa3.parquet?uploads=: Forbidden (HTTP code 403)"
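
To narrow down which request is rejected, the failing URL can be split into its parts. Below is a minimal Python sketch using only the standard library. The reading of the path segments (workspace `testsr2`, storage name `_default_`, then the remaining path) is my assumption about how the proxy URL is laid out. The `uploads` query parameter is what S3 uses to start a multipart upload (CreateMultipartUpload), so the 403 seems to occur when DuckDB initiates a multipart write.

```python
from urllib.parse import urlsplit, parse_qs

# The failing URL, copied from the error message above.
url = ("http://localhost:36867/api/w/testsr2/s3_proxy/_default_/datalake/main/states2/"
       "ducklake-01997aa2-1513-75ee-a66e-ff5a6e3d9aa3.parquet?uploads=")

parts = urlsplit(url)
segments = parts.path.strip("/").split("/")

# Assumed layout: api / w / <workspace> / s3_proxy / <storage> / <remaining path...>
workspace = segments[2]
storage = segments[4]
remaining_path = "/".join(segments[5:])

# keep_blank_values=True so "uploads=" (empty value) is not silently dropped.
query = parse_qs(parts.query, keep_blank_values=True)
is_multipart_init = "uploads" in query

print("workspace:", workspace)
print("storage:", storage)
print("path after storage:", remaining_path)
print("multipart upload initiation:", is_multipart_init)
```

If this reading is right, it would be worth checking whether the credentials behind the `_default_` storage are allowed to start multipart uploads, since plain uploads through the S3 browser work.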

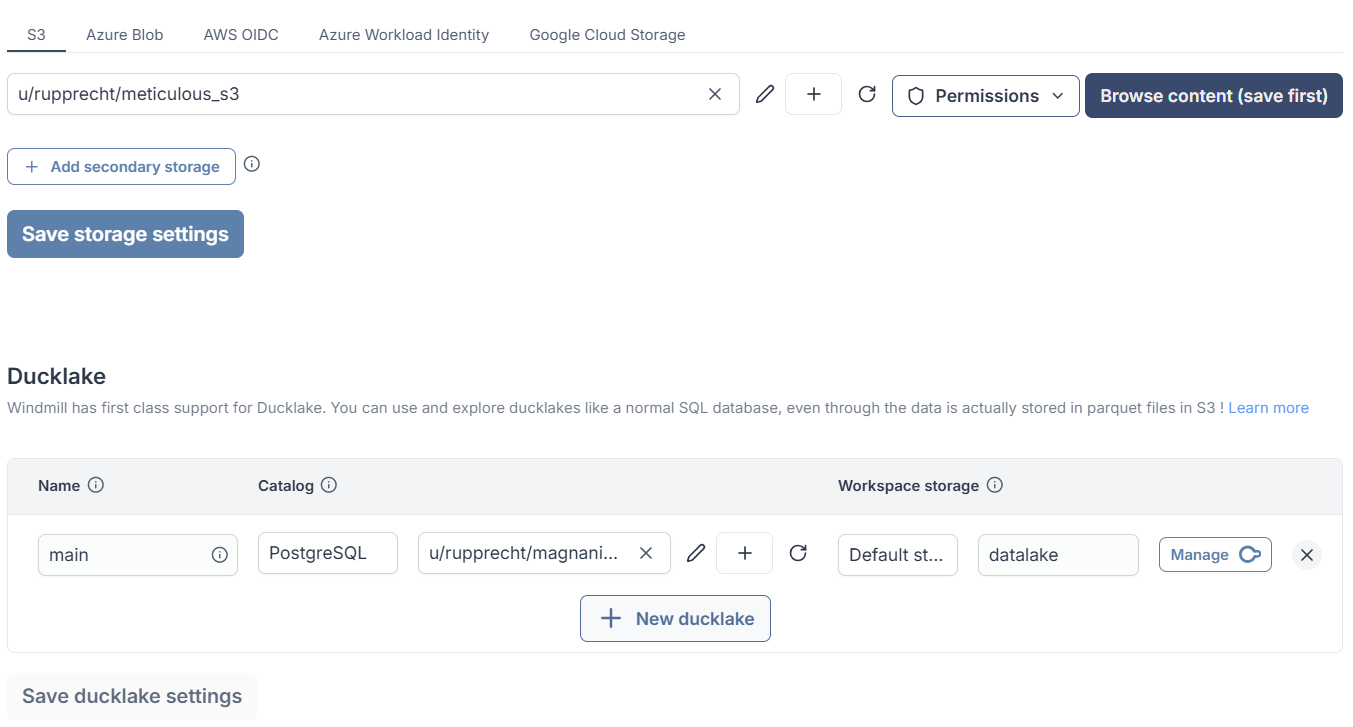

The metadata in Postgres (Neon) looks good, and uploading and downloading through the S3 browser works. A standalone DuckDB/DuckLake setup with the same S3 and Postgres infrastructure works just fine, so I suspect something is wrong with my Windmill configuration.

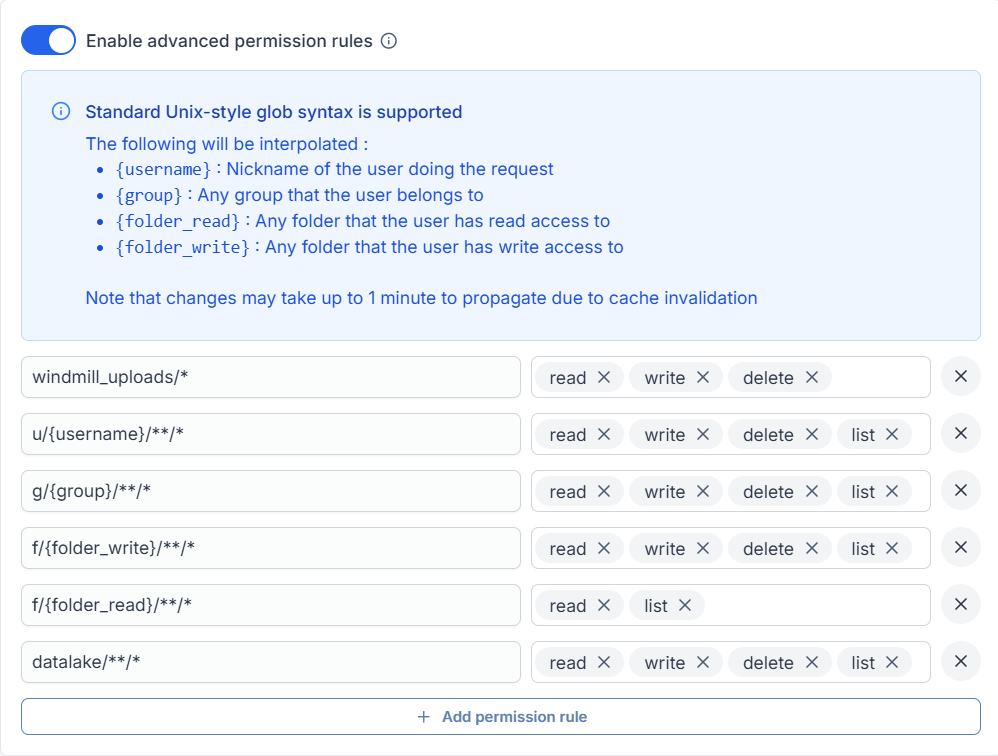

Has anybody had similar problems? Does the `s3_proxy` translate `_default_`? Do I have to set special permissions? I have tried both the legacy permissions and the new advanced permissions.

Can somebody give me guidance on how to diagnose this issue further? I am stuck, so any help is appreciated. Thank you.

I am running the self-hosted Docker version v1.547 with the EE image and license on a Windows machine.